Researchers at the University of Bristol have developed an artificial intelligence (AI) system, dubbed Bi-Touch, that lets a dual-arm robot carry out manual tasks guided by a digital AI agent. The project, based at the Bristol Robotics Laboratory, shows how an AI agent can interpret its environment through tactile and proprioceptive feedback and use that feedback to control the robot's behavior, enabling precise interaction with and manipulation of objects. The findings, published in IEEE Robotics and Automation Letters, have potential applications in agriculture, where fruit picking could be automated, and in prosthetics, where a sense of touch could be recreated in artificial limbs.

Yijiong Lin, the study's lead author from the Faculty of Engineering, said he was excited about the potential of Bi-Touch. He explained that AI agents can be trained in a virtual world within a few hours to perform bimanual tasks centered on touch, and then deployed directly in the real world without any further training. He added that the tactile bimanual agent can handle delicate objects gently and still complete tasks despite unexpected perturbations.

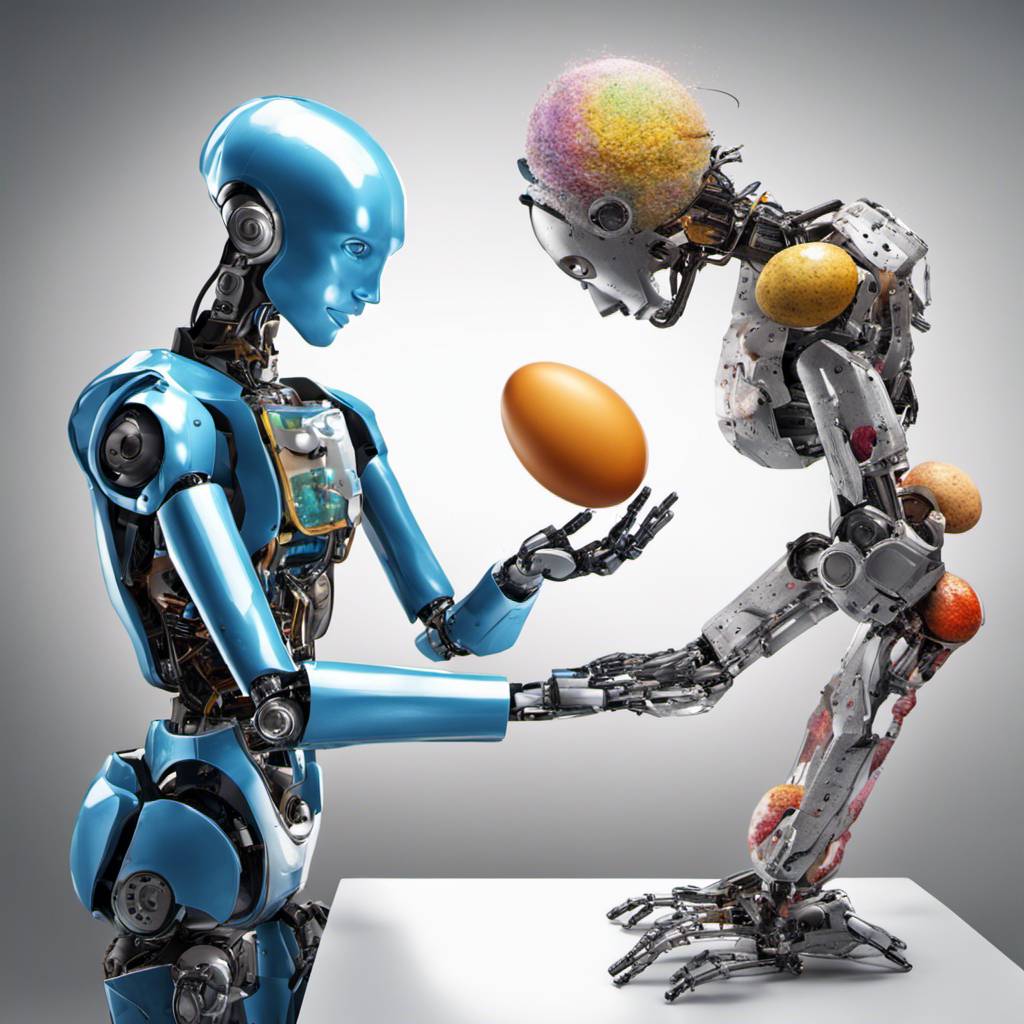

Bimanual manipulation with tactile feedback is a relatively unexplored area of robotics, largely because suitable hardware has been lacking and because effective controllers are hard to design for tasks with large state-action spaces. Recent advances in AI and in robotic tactile sensing, however, have made it possible to build a tactile dual-arm robotic system.

The Bristol team built a simulation of two robot arms equipped with tactile sensors, and designed reward functions and a goal-update mechanism to encourage the robot agents to learn bimanual tasks. The agents acquire these skills through deep reinforcement learning (Deep RL), a robot-learning technique based on trial and error: the robot learns to make decisions by trying different behaviors to complete designated tasks, such as lifting objects without damaging them. Behaviors that succeed earn a reward, while those that fail earn little or none, so over many attempts the agent learns which actions to avoid.
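The article does not describe Bi-Touch's actual reward functions or goal-update rule, so what follows is only a minimal Python sketch of the general idea under hypothetical assumptions: a lifting task, normalized contact forces from a left and a right tactile sensor, and invented thresholds (`MIN_CONTACT`, `MAX_CONTACT`, `GOAL_STEP` and the rest). `lift_reward` penalizes crushing or dropping the object and rewards progress toward a goal height, and `update_goal` raises that goal once it has been reached. `choose_grip` illustrates trial and error with a simple epsilon-greedy loop over a few candidate grip forces; it is a stand-in for illustration, not deep reinforcement learning, which would train a neural-network policy instead.

```python
import random
from dataclasses import dataclass

# All constants below are hypothetical, chosen only for illustration.
MIN_CONTACT = 0.2      # below this normalized force, the arm has lost contact
MAX_CONTACT = 0.8      # above this, the grip is treated as damaging the object
HEIGHT_SCALE = 0.1     # metres; scales the height error into [0, 1]
GOAL_STEP = 0.02       # metres added to the goal each time it is reached
GOAL_TOLERANCE = 0.005 # metres; how close counts as "reached"
FINAL_HEIGHT = 0.10    # metres; the goal never rises above this


@dataclass
class State:
    left_contact: float   # normalized contact force, left tactile sensor (0..1)
    right_contact: float  # normalized contact force, right tactile sensor (0..1)
    object_height: float  # object height above the table, metres


@dataclass
class Goal:
    target_height: float  # metres


def lift_reward(state: State, goal: Goal) -> float:
    """Hypothetical shaped reward for a two-arm lift."""
    # Squeezing too hard on either side counts as damaging the object.
    if max(state.left_contact, state.right_contact) > MAX_CONTACT:
        return -1.0
    # Losing contact on either side means the object is not held.
    if min(state.left_contact, state.right_contact) < MIN_CONTACT:
        return -0.5
    # Otherwise, reward closeness to the current goal height.
    error = abs(goal.target_height - state.object_height)
    return 1.0 - min(error / HEIGHT_SCALE, 1.0)


def update_goal(state: State, goal: Goal) -> Goal:
    """Raise the target height once the current one is reached, up to a cap."""
    if abs(goal.target_height - state.object_height) < GOAL_TOLERANCE:
        return Goal(min(goal.target_height + GOAL_STEP, FINAL_HEIGHT))
    return goal


def choose_grip(candidates, episodes=200, epsilon=0.1, seed=0):
    """Epsilon-greedy trial and error over candidate grip forces (toy example)."""
    if episodes < len(candidates):
        raise ValueError("need at least one episode per candidate")
    rng = random.Random(seed)
    totals = {f: 0.0 for f in candidates}
    counts = {f: 0 for f in candidates}
    goal = Goal(0.02)
    for _ in range(episodes):
        untried = [f for f in candidates if counts[f] == 0]
        if untried:
            force = untried[0]                   # try every option once
        elif rng.random() < epsilon:
            force = rng.choice(candidates)       # explore
        else:
            force = max(candidates, key=lambda f: totals[f] / counts[f])  # exploit
        # Toy simulator: the object reaches the goal only if the grip holds.
        height = goal.target_height if force >= MIN_CONTACT else 0.0
        reward = lift_reward(State(force, force, height), goal)
        totals[force] += reward
        counts[force] += 1
    # Return the grip force with the best average reward.
    return max(candidates, key=lambda f: totals[f] / counts[f])
```

With this toy simulator, `choose_grip([0.1, 0.5, 0.9])` settles on the middle force: the weak grip loses contact and the strong grip exceeds the damage threshold, so both collect negative rewards.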

The AI agent operates without visual input, relying solely on proprioceptive feedback (the sense of the robot's own movement, action, and position) and tactile feedback. This shows how much robotic manipulation can achieve by combining touch sensing with learned control, without relying on vision at all.
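As a rough illustration of an agent that never sees its surroundings, the sketch below assembles a policy input from proprioceptive readings (joint angles) and tactile readings for each arm, with no camera channel at all. The field names, the fixed vector sizes, and the scaling constant `TACTILE_MAX` are hypothetical; the article does not say what Bi-Touch's observations actually contain.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical sizes: a 6-joint arm and a 4-value tactile summary per sensor.
NUM_JOINTS = 6
NUM_TACTILE = 4
TACTILE_MAX = 255.0  # hypothetical full-scale value of a raw tactile reading


@dataclass
class ArmReading:
    joint_angles: List[float]  # proprioception: where the arm is (radians)
    tactile_raw: List[float]   # touch: raw readings from the arm's tactile sensor


def normalize_tactile(raw: List[float]) -> List[float]:
    """Scale raw tactile readings into [0, 1], clipping out-of-range values."""
    return [min(max(r / TACTILE_MAX, 0.0), 1.0) for r in raw]


def build_observation(left: ArmReading, right: ArmReading) -> List[float]:
    """Concatenate proprioceptive and tactile features from both arms.

    There is deliberately no image input: the policy only sees
    joint angles and touch.
    """
    obs: List[float] = []
    for arm in (left, right):
        # A fixed layout keeps the policy's input size constant.
        if len(arm.joint_angles) != NUM_JOINTS or len(arm.tactile_raw) != NUM_TACTILE:
            raise ValueError("unexpected sensor vector size")
        obs.extend(arm.joint_angles)
        obs.extend(normalize_tactile(arm.tactile_raw))
    return obs
```

Each arm contributes 10 values here, so the observation has 20 entries; raising an error on a mis-sized reading is one simple way to keep that layout fixed.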

Co-author Professor Nathan Lepora described Bi-Touch as a promising approach that combines affordable software and hardware to learn bimanual behaviors with touch in simulation, behaviors that can then be applied directly in the real world. Because the team's tactile dual-arm robot simulation will be released as open-source code, other researchers will be able to use it to study different tasks, making it well suited to developing further downstream applications.

This work represents a significant step forward for AI and robotics. The Bi-Touch system highlights the potential for automation across several sectors and underscores the importance of touch for robots that handle objects. As the technology evolves, it will be interesting to see how these developments shape the future.