TryHackMe – Advent of Cyber 2023 – Day 7

Day 7 of TryHackMe’s Advent of Cyber 2023 event is focused on using Linux commands to parse event logs. The importance of logs is highlighted, and we get to develop our Linux chops using commands like grep, cut, sort, uniq, and wc.

By working with these commands to perform a variety of tasks, we become increasingly familiar with how powerful they can be to parse large data sets such as event logs.

My favorite part about Day 7 was the feeling of slowly closing in on the ultimate target, which, in this case, included base64 encoded data that we were required to find, identify, isolate, and decode.

About This Walkthrough/Disclaimer:

In this walkthrough I try to provide a unique perspective into the topics covered by the room. Sometimes I will also review a topic that isn’t covered in the TryHackMe room because I feel it may be a useful supplement.

I try to prevent spoilers by requiring a manual action (highlighting) to obtain all solutions. This way you can follow along without being handed the solution if you don’t want it. Always try to work as hard as you can through every problem and only use the solutions as a last resort.

Walkthrough for TryHackMe Advent of Cyber 2023 – Day 7

Question 1

How many unique IP addresses are connected to the proxy server?

Start up the VM using the green ‘Start Machine’ button at the top of Day 7’s activity text.

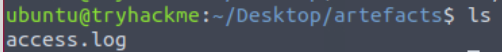

We will be working with the access.log file in the artefacts directory on the Desktop.

If we cat out this file, we will see that it is massive. We can check how many lines the log file contains by running wc with the -l flag:

wc -l access.log

With nearly 50,000 entries, we will need to use some serious linux-fu to parse the data effectively!

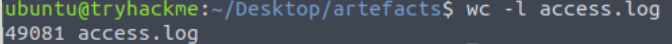

To answer the first question, we need to get the total number of unique IP addresses. There are many ways of doing this, but in this case I decided to start building the command by using cut to select the IP addresses:

cut -d ' ' -f2 access.log

Note that I am specifying a space ‘ ‘ as the delimiter and selecting field 2, which corresponds to the IP addresses.

Next we can pipe the output to sort, followed by uniq. This will provide a list of the unique IP addresses in the file:

cut -d ' ' -f2 access.log | sort | uniq

And if (like me) you’re too lazy to count, you can pipe this to wc -l, which will count the lines for us:

cut -d ' ' -f2 access.log | sort | uniq | wc -l

Answer (Highlight Below):

9
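As a small variation on the pipeline above, sort -u sorts and removes duplicates in one step, so it can stand in for sort | uniq. The sketch below runs it against a tiny made-up log; the entries, addresses, and domains are invented for illustration and only mimic the space-delimited layout described above (timestamp first, source IP second).

```shell
# Build a throwaway sample log; every entry here is invented for illustration.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
[2023/10/25:15:42:02] 10.10.0.5 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:03] 10.10.0.9 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:04] 10.10.0.5 files.example.thm:80 GET /a 404 128 "curl/8.0"
EOF

# Field 2 is the source IP; sort -u gives the same result as sort | uniq.
unique_ips=$(cut -d ' ' -f2 "$sample_log" | sort -u | wc -l)
echo "$unique_ips"

rm -f "$sample_log"
```

On the real access.log the same command would simply take the log file name in place of "$sample_log".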

Question 2

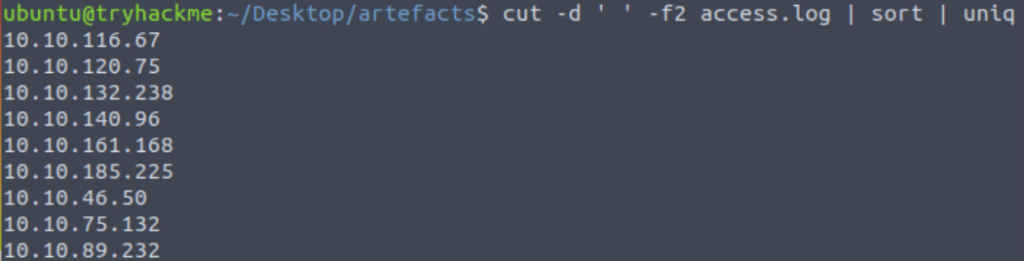

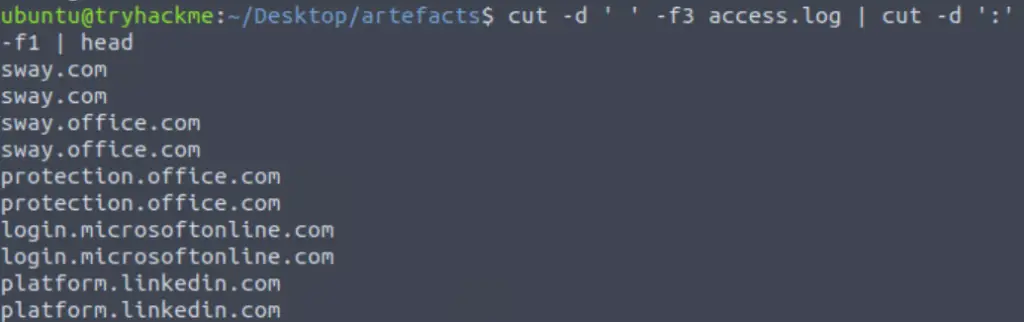

How many unique domains were accessed by all workstations?

To answer this question, first we need to identify the domains contained within the log file. If we look at a log entry, we will see that domain:port is the third field (using a delimiter of a space ‘ ‘). For example in the entry below, the domain is ‘storage.live.com’:

We can therefore cut the domain:port field with the following command:

cut -d ' ' -f3 access.log

This selection includes the port, however. To get the most accurate (and the correct) result, we will need to remove the port number. We can again do this using the cut command, this time using the colon ‘:’ as the delimiter and keeping the first field:

cut -d ' ' -f3 access.log | cut -d ':' -f1

Now that we have a list of the domains, we can sort it and use uniq to eliminate all duplicates. Then we can use wc -l to get the total:

cut -d ' ' -f3 access.log | cut -d ':' -f1 | sort | uniq | wc -l

Answer (Highlight Below):

111
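To see why removing the port matters, here is a minimal sketch on a made-up log in which one domain is reached on two different ports. The entries are invented; only the field layout (domain:port in the third space-delimited field) follows the description above.

```shell
# Throwaway sample log; all entries are invented for illustration.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
[2023/10/25:15:42:02] 10.10.0.5 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:03] 10.10.0.9 example.thm:80 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:04] 10.10.0.5 files.example.thm:80 GET /a 404 128 "curl/8.0"
EOF

# Counting domain:port pairs treats example.thm:443 and example.thm:80 as different.
with_port=$(cut -d ' ' -f3 "$sample_log" | sort | uniq | wc -l)

# Cutting on ':' first keeps only the domain, so example.thm is counted once.
without_port=$(cut -d ' ' -f3 "$sample_log" | cut -d ':' -f1 | sort | uniq | wc -l)

echo "domain:port pairs: $with_port, domains: $without_port"
rm -f "$sample_log"
```

In this sample the first count is 3 and the second is 2, which is the kind of overcount the extra cut avoids.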

Question 3

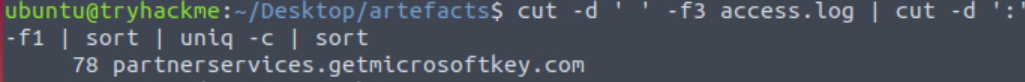

What status code is generated by the HTTP requests to the least accessed domain?

In order to answer this question, first we have to generate a list of the domains and the count for each domain. As with the last example, we can get the list of domains using cut:

cut -d ' ' -f3 access.log | cut -d ':' -f1

We can still use sort and uniq here, but since we want to generate the count for each domain, we will be using -c with uniq:

cut -d ' ' -f3 access.log | cut -d ':' -f1 | sort | uniq -c

The only problem with this is that the list is sorted alphabetically rather than by count. To sort by count, we can use sort again, this time with the -nr option (numeric, in reverse order), which puts the least accessed domain at the bottom of the output:

cut -d ' ' -f3 access.log | cut -d ':' -f1 | sort | uniq -c | sort -nr

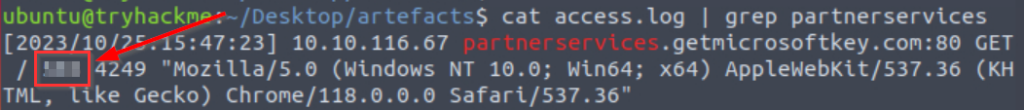

So the least accessed domain is ‘partnerservices.getmicrosoftkey.com’.
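If we only care about the least accessed domain, one possible shortcut is to sort the counts in ascending order and keep the first line. The sketch below does that on a made-up log (all entries invented) and uses awk, which the room's command list does not include, to drop the count column.

```shell
# Throwaway sample log; all entries are invented for illustration.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
[2023/10/25:15:42:02] 10.10.0.5 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:03] 10.10.0.9 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:04] 10.10.0.5 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:05] 10.10.0.5 cdn.example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:06] 10.10.0.9 cdn.example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:07] 10.10.0.5 files.example.thm:80 GET /a 404 128 "curl/8.0"
EOF

# uniq -c prefixes each domain with its count; sort -n orders by that count
# (smallest first), head keeps the top line, and awk prints the domain column.
least=$(cut -d ' ' -f3 "$sample_log" | cut -d ':' -f1 | sort | uniq -c \
  | sort -n | head -n 1 | awk '{print $2}')
echo "$least"

rm -f "$sample_log"
```

Note that if several domains tie for the lowest count, this only shows one of them, so it is still worth looking at the full list.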

Let’s cat the log file and grep for this domain, so that we can see the full entries corresponding to the domain:

cat access.log | grep partnerservices

Answer (Highlight Below):

503
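Rather than reading the status code off the screen, we could also cut it out. This is only a sketch: it assumes the status code is the sixth space-delimited field, which is true of the invented sample entries below but should be checked against a real entry before relying on it.

```shell
# Throwaway sample log; all entries are invented for illustration.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
[2023/10/25:15:42:02] 10.10.0.5 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:03] 10.10.0.9 rare.example.thm:443 GET / 503 128 "curl/8.0"
[2023/10/25:15:42:04] 10.10.0.5 rare.example.thm:443 GET / 503 128 "curl/8.0"
EOF

# Assumption: field 6 holds the status code in this layout.
# grep -F matches the domain as a fixed string rather than a regex.
status_codes=$(grep -F 'rare.example.thm' "$sample_log" | cut -d ' ' -f6 | sort -u)
echo "$status_codes"

rm -f "$sample_log"
```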

Question 4

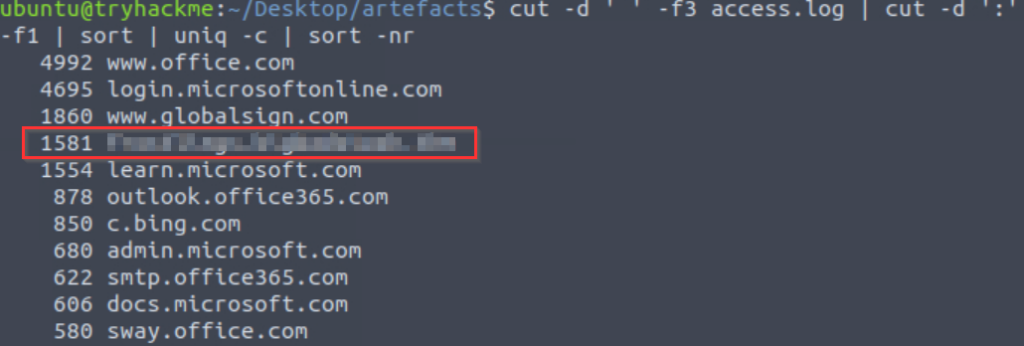

Based on the high count of connection attempts, what is the name of the suspicious domain?

We need to go back to the results from the last question to get the list of domains sorted by count:

cut -d ' ' -f3 access.log | cut -d ':' -f1 | sort | uniq -c | sort -nr

When looking through the output, one domain in particular stands out:

Answer (Highlight Below):

frostlings.bigbadstash.thm

Question 5

What is the source IP of the workstation that accessed the malicious domain?

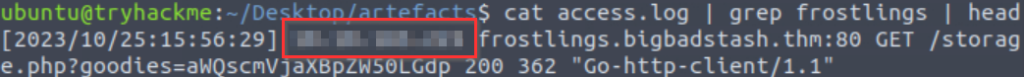

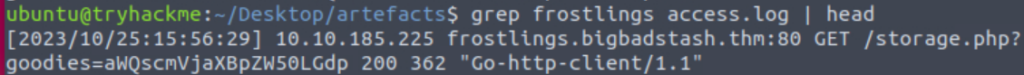

We can get this by grepping for the malicious domain:

grep frostlings access.log

Answer (Highlight Below):

10.10.185.225
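The grep output contains full log entries, so the IP still has to be read off by eye. A minimal sketch of narrowing it down further is shown below, reusing the same cut on field 2 from Question 1. The sample log, domain, and addresses are invented for illustration.

```shell
# Throwaway sample log; all entries are invented for illustration.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
[2023/10/25:15:42:02] 10.10.0.5 bad.example.thm:80 GET /x 200 362 "curl/8.0"
[2023/10/25:15:42:03] 10.10.0.9 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:04] 10.10.0.5 bad.example.thm:80 GET /y 200 362 "curl/8.0"
EOF

# Keep only entries for the domain, select the source IP, and de-duplicate.
source_ips=$(grep -F 'bad.example.thm' "$sample_log" | cut -d ' ' -f2 | sort -u)
echo "$source_ips"

rm -f "$sample_log"
```

If more than one workstation had contacted the domain, each address would appear on its own line.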

Question 6

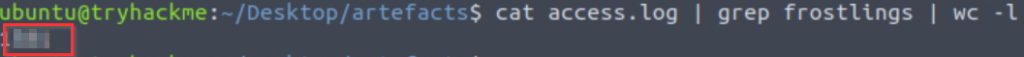

How many requests were made on the malicious domain in total?

To answer this question, all we need to do is pipe the output of the grep command from the last question into wc -l to get the line count:

grep frostlings access.log | wc -l

Answer (Highlight Below):

1581
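As an alternative to piping into wc -l, grep can count matching lines itself with the -c option. The sketch below shows both forms agreeing on a made-up log; the entries and domain are invented for illustration.

```shell
# Throwaway sample log; all entries are invented for illustration.
sample_log=$(mktemp)
cat > "$sample_log" <<'EOF'
[2023/10/25:15:42:02] 10.10.0.5 bad.example.thm:80 GET /x 200 362 "curl/8.0"
[2023/10/25:15:42:03] 10.10.0.9 example.thm:443 GET / 200 512 "curl/8.0"
[2023/10/25:15:42:04] 10.10.0.5 bad.example.thm:80 GET /y 200 362 "curl/8.0"
EOF

# grep -c prints the number of matching lines instead of the lines themselves.
count_with_c=$(grep -c -F 'bad.example.thm' "$sample_log")

# The pipeline form used in the walkthrough, for comparison.
count_with_wc=$(grep -F 'bad.example.thm' "$sample_log" | wc -l)

echo "$count_with_c $count_with_wc"
rm -f "$sample_log"
```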

Question 7

Having retrieved the exfiltrated data, what is the hidden flag?

Let’s start by looking at the format of the log file entries again:

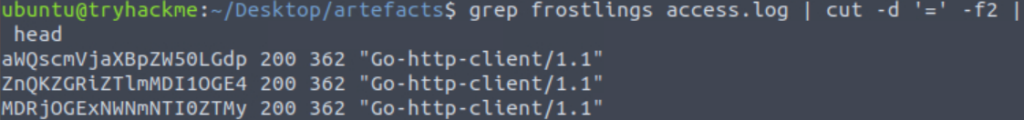

We are trying to access the string that follows the ‘goodies’ parameter. Noting that the string is prefaced by an equal sign ‘=’, I used it as a delimiter to cut by:

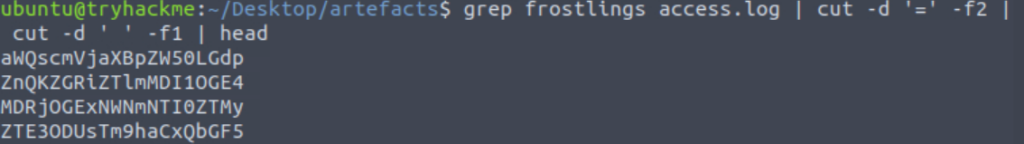

grep frostlings access.log | cut -d '=' -f2

Then we can remove everything else by cutting again using a delimiter of a space ‘ ‘ and keeping the first field:

grep frostlings access.log | cut -d '=' -f2 | cut -d ' ' -f1

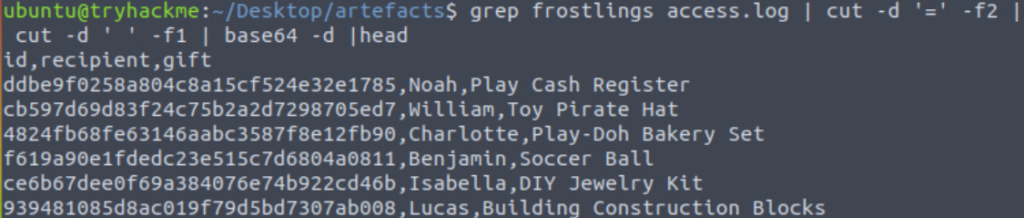

Now we can decode this from base64 using base64 -d:

grep frostlings access.log | cut -d '=' -f2 | cut -d ' ' -f1 | base64 -d

We still have a long list, but it is short enough to look through by eye for the flag. However, this lesson is all about Linux commands so let’s get the terminal to do the hard work for us!

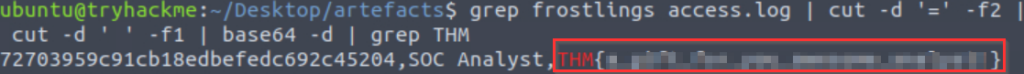

We know the flag will (probably) start with THM, so let’s grep for it:

grep frostlings access.log | cut -d '=' -f2 | cut -d ' ' -f1 | base64 -d | grep THM

We have the flag! But we can do better, can’t we?

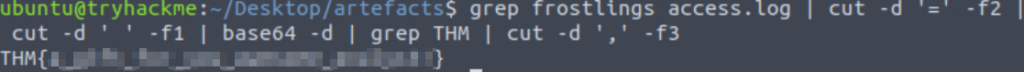

Let’s extract the flag by itself using the cut command. Noting that the flag is prefaced by a comma ‘,’ we can use this as a delimiter and retrieve the third field:

grep frostlings access.log | cut -d '=' -f2 | cut -d ' ' -f1 | base64 -d | grep THM | cut -d ',' -f3

Answer (Highlight Below):

THM{a_gift_for_you_awesome_analyst!}
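One detail worth knowing about this pipeline: base64 strings can end in ‘=’ padding, and cut -d '=' -f2 discards anything after the second ‘=’ on the line, padding included. The pipeline above worked for me in this room, but a more defensive sketch is to use -f2- (field 2 through the end of the line) so the padding survives, and to decode each value separately. Everything in the sample below is invented, including the domain, the storage.php path, the payloads, and the flag; the values are encoded with base64 at runtime so the example does not depend on hand-written strings.

```shell
# Encode two invented payload lines; the second needs '=' padding.
payload_header=$(printf 'id,recipient,gift\n' | base64)
payload_flag=$(printf 'a1,Noah,THM{example_flag}\n' | base64)

# Throwaway sample log; entries, path, and domain are invented for illustration.
sample_log=$(mktemp)
cat > "$sample_log" <<EOF
[2023/10/25:15:43:10] 10.10.0.5 bad.example.thm:80 GET /storage.php?goodies=${payload_header} 200 362 "curl/8.0"
[2023/10/25:15:43:11] 10.10.0.5 bad.example.thm:80 GET /storage.php?goodies=${payload_flag} 200 362 "curl/8.0"
EOF

# -f2- keeps everything after the first '=', so trailing padding is preserved;
# the second cut then trims the rest of the log entry at the first space.
# Each value is decoded on its own so one entry cannot affect the next.
flag=$(grep -F 'goodies=' "$sample_log" \
  | cut -d '=' -f2- \
  | cut -d ' ' -f1 \
  | while IFS= read -r chunk; do printf '%s\n' "$chunk" | base64 -d; done \
  | grep THM \
  | cut -d ',' -f3)
echo "$flag"

rm -f "$sample_log"
```

The loop is slower than a single base64 -d call on a large log, so it is a trade of speed for predictability.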

Conclusion

I sincerely enjoyed Day 7 of the TryHackMe Advent of Cyber 2023 event.

It’s always great to practice fundamental Linux skills, and there are frequently occasions when we may need to parse large data sets for useful data. Having these skills at the ready is essential so that we don’t need to spend hours manually poring through data. At the very least, we can often remove non-essential information in order to make it easier to identify something of value.